Explainable AI in finance is reshaping how banks and fintechs approach transparency and trust. Over 70% of banks adopting AI cite lack of explainability as a top regulatory concern (Source: PwC, 2024). Explainable AI brings clarity to automated decision-making. It allows financial organizations to deploy AI confidently while upholding ethical standards, complying with regulations, and staying transparent with customers. In this guide, we will discuss how explainable AI in fintech works, why it matters, and how organizations can implement it in modern fintech systems.

What is Explainable AI (XAI)?

Explainable AI in finance is a set of methods and practices that make AI-driven decisions understandable, interpretable, and trustworthy. Instead of treating a model as a “black box” that produces answers without context, financial institutions can see exactly how an algorithm arrived at a decision. This visibility helps fintech firms and banks satisfy regulatory requirements, reduce bias, and build trust with their customers.

Explainable AI in finance is different from Generative AI, even though both rely on advanced machine learning (ML). Generative AI prioritizes creation, producing new content such as text, images, or code, whereas Explainable AI emphasizes accountability and transparency by showing users how a model reached its output. The accompanying table outlines some of the primary distinctions:

| | Explainable AI (XAI) | Generative AI (Gen AI) |
|---|---|---|
| Purpose | Clarifies and audits AI decisions. | Generates original content from data. |
| Output | Logical explanations for model predictions or actions. | Text, visuals, code, or other creative outputs. |
| Focus | Trust, compliance, and fairness. | Innovation, automation, and content creation. |
| Applications | Credit scoring, fraud detection, risk modeling, compliance reporting. | Chatbots, marketing content, image design, data simulation. |
| Relevance to finance | Aligns AI with ethical and legal rules. | Enhances customer experience and operational creativity. |

Comparison table between Explainable AI and Generative AI in finance context.

In short, explainable AI in finance strengthens the foundation of AI-powered decision-making, ensuring transparency where it matters most, while Generative AI drives innovation and engagement in more creative financial applications.

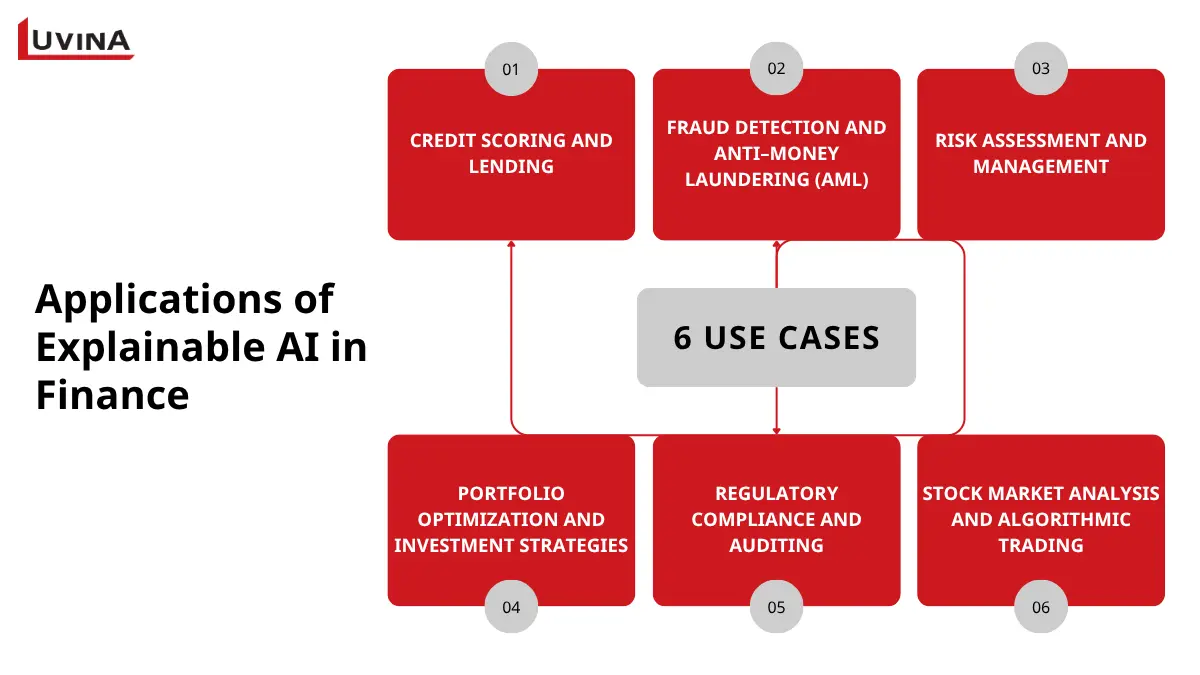

Key Applications of Explainable AI in Finance

Explainable AI in finance increases accountability and openness at every level of the financial system. The main areas where XAI is changing financial processes are listed here:

Credit scoring and lending

Some of the most important uses of explainable AI in finance are in loan approval and credit assessment. Techniques like SHAP or LIME show which factors (income, credit history, debt ratio) influence approvals or rejections. This openness helps borrowers understand their credit decisions and gives financial organizations a defensible rationale for every decision. It also supports fairer, regulation-aligned lending policies and reduces unintentional bias. For instance, a Japanese fintech used SHAP-based models to justify loan decisions to the FSA, cutting review time by 30%.
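To make the idea of feature attribution concrete, here is a minimal sketch that computes exact Shapley values, the quantity SHAP is built on, for a toy credit score. It does not use the `shap` library. The scoring function, the feature names, and the applicant and baseline values are all assumptions made up for illustration, not a real lending model.

```python
from itertools import combinations
from math import factorial

# Hypothetical applicant and "average applicant" baseline (illustrative values only).
FEATURES = ["income", "credit_history_years", "debt_ratio"]
applicant = {"income": 42_000, "credit_history_years": 2, "debt_ratio": 0.55}
baseline = {"income": 60_000, "credit_history_years": 8, "debt_ratio": 0.30}


def score(x):
    """Toy credit score: a hand-written stand-in for a trained model."""
    s = 0.00001 * x["income"] + 0.05 * x["credit_history_years"] - 1.5 * x["debt_ratio"]
    # An interaction term, so the model is not purely additive.
    if x["debt_ratio"] > 0.5 and x["income"] < 50_000:
        s -= 0.2
    return s


def value(subset):
    """Model output when only the features in `subset` take the applicant's values."""
    mixed = {f: (applicant[f] if f in subset else baseline[f]) for f in FEATURES}
    return score(mixed)


def shapley_values():
    """Exact Shapley values, enumerating every coalition of the other features."""
    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for k in range(n):
            for coalition in combinations(others, k):
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                with_f = value(set(coalition) | {f})
                without_f = value(set(coalition))
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi


phi = shapley_values()
# Print the most negative contribution first: the main reason the score is low.
for name, contribution in sorted(phi.items(), key=lambda kv: kv[1]):
    print(f"{name:22s} {contribution:+.3f}")
```

The contributions add up to the gap between the applicant's score and the baseline score, which is what lets a lender say how much each factor moved the decision. Exact enumeration only scales to a handful of features; libraries such as SHAP rely on approximations for real models.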

Fraud detection and anti-money laundering (AML)

AI-driven fraud detection systems examine huge datasets to identify suspicious transactions, but their complexity can obscure the reasoning behind an alert. XAI tools provide clarity by pointing out which characteristics, such as unusual transaction amounts, timing, or location, triggered a red flag.
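As a simplified illustration of an alert that carries its own explanation, the sketch below flags a transaction when any feature deviates strongly from the customer's history and reports which features were responsible. It is a rule-based z-score check rather than an explanation of a trained fraud model, and the history values, feature names, and threshold are assumptions.

```python
from statistics import mean, stdev

# Hypothetical transaction history for one customer (illustrative values only).
history = {
    "amount": [42.0, 55.0, 38.0, 61.0, 47.0, 52.0],
    "hour_of_day": [12, 13, 18, 19, 12, 17],
    "km_from_home": [2.0, 5.0, 1.0, 3.0, 4.0, 2.0],
}
Z_THRESHOLD = 3.0  # assumed cut-off; a real system would tune this


def explain_flag(txn):
    """Return (flagged, reasons); each reason is a (feature, z-score) pair."""
    reasons = []
    for feature, past in history.items():
        z = (txn[feature] - mean(past)) / stdev(past)
        if abs(z) >= Z_THRESHOLD:
            reasons.append((feature, round(z, 1)))
    # Strongest deviation first, so an analyst sees the main driver at the top.
    reasons.sort(key=lambda r: abs(r[1]), reverse=True)
    return bool(reasons), reasons


flagged, reasons = explain_flag(
    {"amount": 950.0, "hour_of_day": 14, "km_from_home": 3.0}
)
print(flagged, reasons)
```

Here only the amount is far outside the customer's usual range, so the alert names it as the single reason; the time and location stay within normal variation and are not reported.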

Risk assessment and management

In risk management, explainable AI improves the interpretability of predictive models used to evaluate market exposure, investment performance, and credit risk. XAI techniques analyze how variables such as asset volatility, interest rate changes, and economic indicators affect model outputs. As a result, financial analysts gain a clearer view of risk profiles and can adjust strategies accordingly while remaining transparent with investors and regulators.

Portfolio optimization and investment strategies

Portfolio management often relies on AI algorithms that suggest the best asset allocations or trading strategies. By showing how specific factors, such as asset performance or market trends, affect portfolio recommendations, explainable AI makes these models easier to understand.

Regulatory compliance and auditing

Explainable artificial intelligence in finance assists with compliance by documenting decision logic and ensuring that the model’s outputs can be audited. It provides regulators with the necessary visibility into automated systems, thereby decreasing the risk of penalties, lawsuits, or ethical violations.

Stock market analysis and algorithmic trading

XAI-based tools, like feature attribution maps and visual interpretation models, help investors understand why an algorithm issues a buy or sell recommendation. This increases both the reliability of trading decisions and confidence in AI systems operating in volatile markets.

Benefits and challenges of integrating Explainable AI in Fintech systems

Explainable AI in finance not only clarifies how algorithms reach their conclusions but also boosts the credibility of AI-driven systems inside the organization. Although integrating explainable AI in fintech apps offers clear strategic advantages, it also presents several technical and ethical challenges that must be carefully considered.

1. Benefits

Explainable AI brings several benefits that improve both client relationships and internal decision-making:

- Regulatory compliance: Explainable AI (XAI) helps financial institutions justify AI-based decisions, including loan approvals, fraud alerts, and credit risk scores.

- Trust and fairness: Transparent explanations let consumers understand why a specific result was reached, which reduces misunderstanding, mitigates bias, and builds lasting consumer trust.

- Enhanced decision-making: XAI identifies the main drivers of model predictions, giving human analysts insight for risk identification, strategy optimization, and more informed, data-driven business decisions.

- Bias and risk mitigation: XAI helps surface implicit bias in data inputs, supporting ethical and regulation-compliant lending and underwriting decisions.

- Operational accountability: By tracing every AI decision back to specific data points, XAI helps companies align automated outputs with corporate and legal objectives.

2. Challenges

Despite its promise, using explainable AI in finance presents major difficulties that limit its scalability and consistency:

- Performance vs. interpretability trade-off: Simplifying models improves explainability but may lower predictive power, especially in deep learning systems for trading or risk analysis.

- Scalability limitations: Many current XAI tools struggle to handle large, changing financial datasets or to produce explanations in real-time decision scenarios.

- Regulatory and privacy risks: Detailed explanations can accidentally expose sensitive financial or personal information, and inconsistent rules across jurisdictions make compliance harder.

- Complexity of implementation: Building explainable systems calls for specialized expertise, which raises development costs and resource requirements for financial companies.

- End user accessibility: Most XAI techniques are designed for technical users, so business users, consumers, and regulators often struggle to understand AI-driven results.

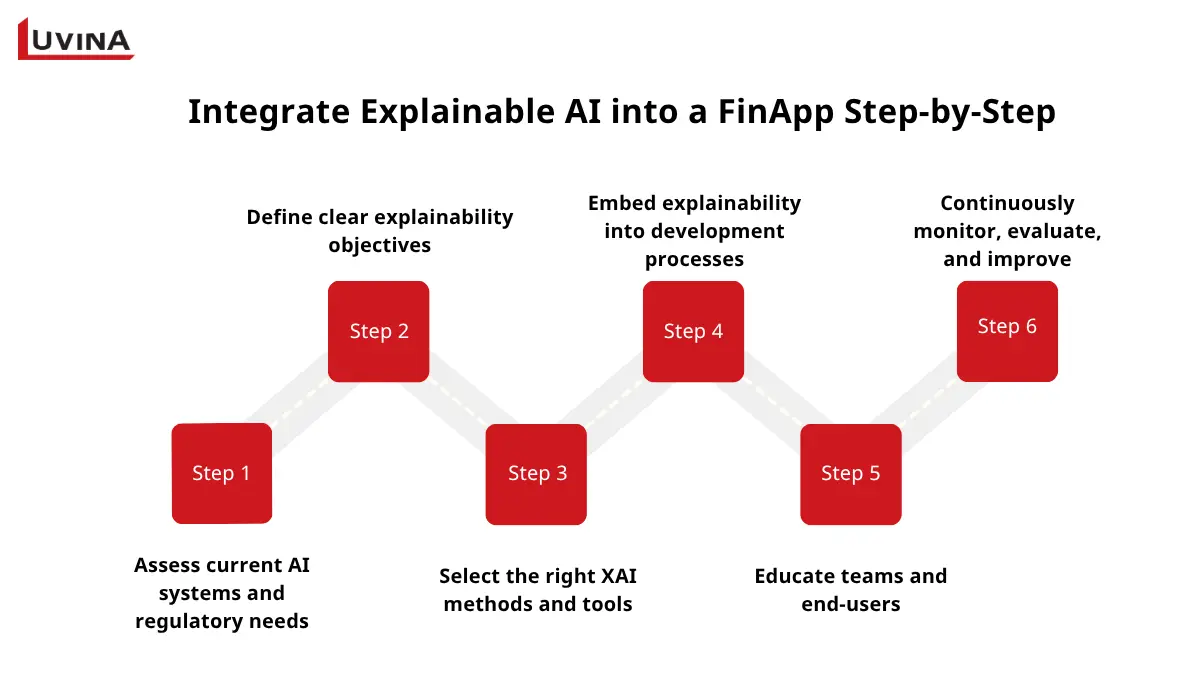

How to Integrate Explainable AI Into A Fintech Application: A Step-by-step Framework

Integrating explainable AI in fintech apps calls for a well-thought-out strategy that combines technical soundness with user-friendly design and regulatory knowledge. The framework below outlines six steps organizations can follow to integrate explainable AI into their financial workflows properly and sustainably.

Step 1: Assess current AI systems and regulatory needs

Start by reviewing all current AI models in your fintech environment. Working with data scientists, risk officers, and regulatory specialists, identify blind spots such as biased data or unclear model outputs, and assess how each system affects compliance outcomes and customers.

This first evaluation lays the groundwork for a practical explainable AI plan.
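One simple check that can be part of such an assessment is comparing approval rates across groups in historical decisions. The sketch below computes the ratio of the lowest to the highest group approval rate. The data and group labels are invented for illustration, and the threshold mentioned afterwards is a commonly cited rule of thumb rather than a requirement stated in this guide.

```python
from collections import defaultdict

# Hypothetical historical decisions: (group label, approved?) (illustrative only).
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", True), ("B", False),
]


def approval_rates(rows):
    """Approval rate per group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, ok in rows:
        total[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / total[g] for g in total}


def disparate_impact(rows):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(rows)
    return min(rates.values()) / max(rates.values())


rates = approval_rates(decisions)
ratio = disparate_impact(decisions)
print(rates, round(ratio, 2))
```

A ratio well below 1.0 (0.8 is often used as a rough warning line) does not prove the model is unfair, but it marks a system that deserves a closer look before explainability tooling is layered on top.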

Step 2: Define clear explainability objectives

Define what you want explainable AI in fintech to accomplish for your company. Whether the aim is to improve customer engagement, guarantee equitable lending, or satisfy regulatory requirements, aligning explainability with broader business outcomes shows where to deploy XAI first. Priority should go to high-stakes applications like automated credit approvals or investment advice.

Step 3: Select the right XAI methods and tools

Select frameworks and approaches suited to your model type and operational requirements.

- LIME (Local Interpretable Model-agnostic Explanations): Explains individual predictions by fitting a simple, interpretable model around each one.

- SHAP (SHapley Additive exPlanations): Quantifies the influence of every feature on an outcome.

- Counterfactual explanations: Show how changing input variables would change a prediction, which also supports model validation.

Add open source resources, like Google’s What-If Tool, Microsoft’s InterpretML, or IBM’s AI Fairness 360, to those approaches to enable transparency and bias detection.
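To illustrate the counterfactual idea from the list above, here is a minimal sketch that searches, one feature at a time, for the smallest change that turns a rejection into an approval. The approval rule, step sizes, and applicant values are assumptions for illustration; dedicated counterfactual tools use more careful search and feasibility constraints.

```python
# Hypothetical approval rule standing in for a trained model (illustrative only).
def approve(x):
    score = (
        0.00001 * x["income"]
        - 1.5 * x["debt_ratio"]
        + 0.05 * x["credit_history_years"]
    )
    return score >= 0.0


# Assumed step sizes, in the direction an applicant could realistically move.
STEPS = {"income": 1_000, "debt_ratio": -0.01, "credit_history_years": 1}
MAX_STEPS = 100


def counterfactuals(x):
    """For each feature alone, find the nearest value that flips a rejection."""
    found = {}
    for feature, step in STEPS.items():
        for n in range(1, MAX_STEPS + 1):
            candidate = dict(x)
            # Rounding avoids float noise such as 0.33999999999999997.
            candidate[feature] = round(x[feature] + n * step, 2)
            if approve(candidate):
                found[feature] = candidate[feature]
                break
    return found


applicant = {"income": 42_000, "debt_ratio": 0.55, "credit_history_years": 2}
print(approve(applicant))
print(counterfactuals(applicant))
```

Each entry in the result reads as a statement a loan officer could pass on, for example "the application would have been approved with a debt ratio at or below this value". Features that cannot flip the decision within `MAX_STEPS` are simply left out.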

Step 4: Embed explainability into development processes

Transparency should not be an afterthought. Build explainable AI principles into the design from the earliest phases. To ensure accountability at every level, encourage cross-functional collaboration between compliance staff, engineers, and product teams. Throughout the model lifecycle, maintain audit-ready documentation (including justification, limitations, and performance metrics) that stakeholders and regulators can trust.
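One way to keep documentation audit-ready is to store a structured record for every automated decision. The sketch below shows one possible shape for such a record; the class name, field names, and example values are hypothetical, and the hash is only a basic tamper-evidence check, not a complete audit trail.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit-log entry; all field names here are hypothetical."""

    model_version: str
    inputs: dict
    decision: str
    attributions: dict  # feature -> contribution, from whichever XAI method is used
    known_limitations: str
    recorded_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Sorted keys give a stable serialization, so a record always hashes the same.
        return json.dumps(asdict(self), sort_keys=True)

    def fingerprint(self) -> str:
        """SHA-256 of the serialized record, usable as a tamper-evidence check."""
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


# Example values are invented for illustration.
record = DecisionRecord(
    model_version="credit-model-1.4.0",
    inputs={"income": 42_000, "debt_ratio": 0.55},
    decision="rejected",
    attributions={"income": -0.28, "debt_ratio": -0.475},
    known_limitations="Not validated for applicants with no credit history.",
)
print(record.to_json())
print(record.fingerprint())
```

Storing the explanation next to the inputs and model version means a reviewer can later reconstruct why a decision was made without rerunning the model.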

Step 5: Educate teams and end-users

Adopting explainable AI in finance requires more than tech upgrades. It demands process reorganization and a change in mindset. Train each department on how the models work and why they matter, starting with even the simplest ones. Give customer-facing staff clear, human-readable guidance on what AI-assisted decisions mean and how they were made.
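Human-readable guidance can be generated directly from model explanations. The sketch below turns per-feature attribution scores into plain-language reasons that front-line staff could read to a customer. The wording, feature names, and scores are hypothetical, and any real customer-facing text would need legal and compliance review.

```python
# Hypothetical mapping from model features to customer-facing wording.
REASON_TEXT = {
    "debt_ratio": "Your existing debt is high relative to your income.",
    "credit_history_years": "Your credit history is relatively short.",
    "income": "Your declared income is below the level typical for this loan size.",
}


def top_reasons(attributions, limit=2):
    """Plain-language reasons for the features that lowered the score the most."""
    negative = [(f, v) for f, v in attributions.items() if v < 0]
    negative.sort(key=lambda fv: fv[1])  # most negative first
    # Fall back to the raw feature name if no wording has been written yet.
    return [REASON_TEXT.get(f, f"Factor: {f}") for f, _ in negative[:limit]]


reasons = top_reasons(
    {"income": -0.28, "credit_history_years": -0.30, "debt_ratio": -0.475}
)
for line in reasons:
    print("-", line)
```

Limiting the output to the top few reasons keeps the message short enough for a support call while still reflecting what the model actually weighted.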

Step 6: Continuously monitor, evaluate, and improve

Explainability is an ongoing process. Use monitoring systems to track performance and detect drift; over time, they also help flag potentially biased behavior. To improve models and explanations, regularly ask consumers, regulators, and data teams for feedback.

Continuous iteration ensures that your explainable AI systems remain compliant, trustworthy, and in step with the industry landscape and ethical standards.
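Drift detection can start with something as simple as the Population Stability Index (PSI), a measure often used in credit risk to compare the distribution of scores at training time with the distribution seen in production. The sketch below is a minimal version; the sample scores, bin edges, and the interpretation threshold mentioned afterwards are assumptions.

```python
from math import log


def bin_shares(values, edges, eps=1e-6):
    """Share of values in each bin defined by sorted `edges`."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        # The bin index equals the number of edges at or below v.
        counts[sum(v >= e for e in edges)] += 1
    # eps keeps empty bins from causing log(0) or division by zero.
    return [max(c / len(values), eps) for c in counts]


def psi(expected, actual, edges):
    """Population Stability Index between two samples over shared bins."""
    e = bin_shares(expected, edges)
    a = bin_shares(actual, edges)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical model scores at training time and in production (illustrative only).
training_scores = [0.1, 0.2, 0.3, 0.4, 0.55, 0.6, 0.8, 0.9]
live_scores = [0.1, 0.3, 0.55, 0.6, 0.7, 0.8, 0.9, 0.95]
edges = [0.25, 0.5, 0.75]

drift = psi(training_scores, live_scores, edges)
print(round(drift, 3))
```

A PSI of zero means the two distributions match bin for bin. Values above roughly 0.25 are commonly treated as a sign of significant shift, which would be a cue to re-validate both the model and its explanations.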

Future Trends in Explainable AI in Financial Services

Explainable AI in finance will keep developing as both a competitive edge and a regulatory requirement. The next wave of innovation will bring more transparent, user-friendly systems that let professionals at all levels, not only data scientists, understand, interpret, and trust AI results.

Emerging trends influencing the future of explainable AI in finance are:

- Integration with generative AI: As generative tools are embedded into finance processes, explainability will be essential for showing how automated insights are generated.

- Custom XAI frameworks: Financial organizations will need domain-specific solutions for areas like credit scoring, investment analysis, and fraud detection.

- Autonomous oversight systems: Continuous, real-time monitoring for compliance and risk alerts will increasingly be handled by autonomous, AI-powered systems.

- Balanced interpretability models: Hybrid methods that combine deep learning with interpretable algorithms will allow a better balance between predictive performance and explainability.

- Collaborative regulation: Close coordination among financial experts, AI developers, and regulators will help define evolving norms for ethical and responsible AI governance.

Conclusion

The article above has explored the full picture of explainable AI in finance – from its definition and key applications to integration frameworks and emerging trends shaping the future of financial technology. Through these insights, it’s clear that explainability is not only a regulatory requirement but also a foundation for trust, fairness, and long-term innovation in the financial sector.

As the industry moves toward greater automation and regulatory scrutiny, embracing XAI is no longer just a technical upgrade but a strategic necessity. Want to build transparent fintech solutions with Explainable AI? Partner with Luvina to design compliant, auditable, and trustworthy AI systems.

