One question is gaining urgency as artificial intelligence continues to transform businesses: can we truly trust what AI decides? Many AI systems remain black boxes, even to their developers, who cannot always explain how outputs are produced despite the models' high accuracy.

This is where explainable AI (XAI) comes in. It helps close the gap between performance and transparency by exposing the rationale behind algorithmic predictions and decisions. This guide examines the underlying techniques and evaluation metrics of explainable AI, its practical applications, and the emerging trends shaping transparent and reliable AI.

What Is Explainable AI? Core Concepts

Explainable AI (XAI) is a subfield of AI that aims to make AI decisions transparent and accountable. In essence, XAI is a set of methods and practices that turns opaque machine learning models into understandable, traceable, and reliable systems.

Unlike traditional “black box” models that deliver predictions without context, explainable systems reveal why and how an outcome is reached. At its core, explainable AI aims to bridge the gap between complex algorithmic reasoning and human understanding. It provides insights into model behavior, from data inputs and feature importance to the logic behind predictions. This helps detect bias and errors early, and it supports fairness and compliance with evolving AI governance standards.

Understanding XAI also means recognizing its role in responsible technology deployment. Offering a clear rationale for automated results helps businesses improve user trust, increase accountability, and meet regulatory requirements in fields including finance, healthcare, and cybersecurity.

XAI Methods & Techniques

Explainable AI methods are designed to reveal how algorithms arrive at their conclusions, so that both developers and end users can grasp the rationale behind automated decisions. In practice, explainable AI techniques offer concrete ways to view, assess, and present how input data affects a model's outputs. Depending on the level of detail needed, they can be applied locally, that is, to individual predictions, or globally, that is, across the whole model.

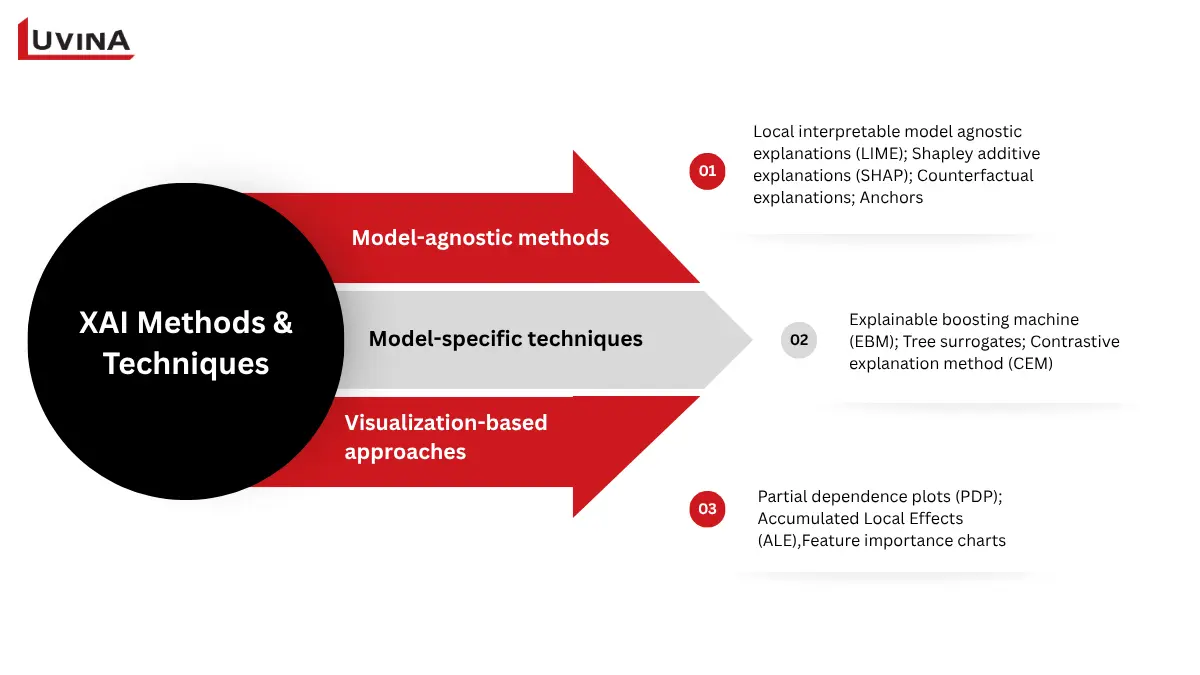

There are three major groups of explainable AI methods and techniques: model-agnostic, model-specific, and visualization-based. The major approaches in each group are as follows:

1. Model-agnostic methods

These explainable AI methods can be applied to any machine learning model, regardless of its internal structure, which makes them adaptable to a wide range of use cases.

- Local interpretable model-agnostic explanations (LIME): Explains individual predictions by perturbing the input data, observing how the model’s outputs change, and fitting a simple local model to those changes.

- Shapley additive explanations (SHAP): Assigns each feature a share of the difference between a prediction and the average (baseline) prediction using Shapley values from cooperative game theory, so local contributions can be aggregated into a global view of feature influence.

- Counterfactual explanations: Show how a small change in the input data would lead to a different prediction, which makes them useful for transparency and fairness testing.

- Anchors: Provide local, easy-to-interpret explanations in the form of if-then rules. When the conditions of an anchor rule hold, the model’s prediction stays the same with high probability, even if other features change.
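To make the game-theoretic idea behind SHAP more concrete, here is a minimal sketch that computes exact Shapley values for a single prediction in plain Python. It is not the SHAP library's implementation: the function `shapley_values`, the scoring function `toy_model`, and the all-zero baseline are assumptions made purely for illustration, and "missing" features are simply replaced by their baseline value.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley values for one instance.

    Features outside a coalition take their baseline value (an assumption).
    The cost grows as 2^n, so this only suits a handful of features.
    """
    n = len(instance)
    values = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [instance[j] if (j in subset or j == i) else baseline[j]
                          for j in range(n)]
                without_i = [instance[j] if j in subset else baseline[j]
                             for j in range(n)]
                # Marginal contribution of feature i to this coalition
                values[i] += weight * (predict(with_i) - predict(without_i))
    return values

# Hypothetical scoring model with an interaction between income and years
def toy_model(x):
    income, debt, years = x
    return 2.0 * income - 3.0 * debt + 0.5 * income * years

instance = [4.0, 1.0, 2.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi)
```

The attributions add up to the difference between the prediction for the instance and the prediction for the baseline, and the interaction term is split evenly between the two features involved. Practical tools rely on approximations because the exact computation becomes infeasible as the number of features grows.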

2. Model-specific techniques

These explainable AI methods are tied to specific kinds of models and aim to make them inherently understandable and auditable.

- Explainable boosting machine (EBM): Combines the clarity of additive models with the accuracy of modern techniques such as boosting, so EBM provides interpretable results with little loss in performance.

- Tree surrogates: Use simple decision trees to approximate complex “black box” models, allowing for both global and instance-level interpretability.

- Contrastive explanation method (CEM): Highlights which features are crucial or missing for a given prediction, revealing both positive and negative factors behind a model’s decision.
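The surrogate idea can be sketched with the simplest possible tree, a single split (a "stump"), trained to imitate a black-box model. Everything in this example is hypothetical: the `black_box` function stands in for an opaque classifier, and `fit_stump` is a brute-force search written for illustration rather than a production tree learner. The key point is that the surrogate learns from the model's outputs, not from ground-truth labels, and its quality is reported as fidelity.

```python
import random

def black_box(x):
    # Hypothetical opaque classifier: approves when a nonlinear score is high
    income, debt = x
    return 1 if income ** 1.5 - 2.0 * debt > 3.0 else 0

def fit_stump(samples, labels):
    """Find the single feature/threshold split that best mimics the labels."""
    best = None
    for feature in range(len(samples[0])):
        for threshold in sorted({s[feature] for s in samples}):
            for above in (0, 1):  # label predicted when value > threshold
                agree = sum(
                    (above if s[feature] > threshold else 1 - above) == y
                    for s, y in zip(samples, labels)
                )
                if best is None or agree > best[0]:
                    best = (agree, feature, threshold, above)
    agree, feature, threshold, above = best
    return {"feature": feature, "threshold": threshold, "above": above,
            "fidelity": agree / len(samples)}

rng = random.Random(0)
samples = [(rng.uniform(0, 10), rng.uniform(0, 5)) for _ in range(200)]
labels = [black_box(s) for s in samples]  # the model's outputs, not ground truth
surrogate = fit_stump(samples, labels)
print(surrogate)
```

Fidelity is the share of samples on which the surrogate agrees with the black box. A low value is a warning that the simple rule does not describe the model well and should not be presented as its explanation.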

3. Visualization-based approaches

These techniques convert the behavior of complicated models into visual insights that are simpler to understand and share.

- Partial dependence plots (PDP): Give a global view of model behavior by showing how variations in one or two features influence the predicted outcome.

- Accumulated Local Effects (ALE): By accounting for dependencies between features, ALE plots give a less biased picture of feature effects than PDPs when features are correlated.

- Feature importance charts: Rank input variables based on their influence on predictions, helping detect bias and understand decision logic.
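As a rough illustration of what a partial dependence plot computes, the sketch below forces one feature to each value on a grid and averages the model's predictions over the dataset. The model `toy_model`, the three-row dataset, and the grid are made up for the example; a plotting library would then draw `curve` against the grid.

```python
def partial_dependence(predict, dataset, feature, grid):
    """Average prediction when `feature` is forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in dataset:
            modified = list(row)
            modified[feature] = value  # overwrite one feature, keep the rest
            total += predict(modified)
        curve.append(total / len(dataset))
    return curve

# Hypothetical model and data, for illustration only
def toy_model(x):
    age, income = x
    return 0.1 * age + 0.5 * income

dataset = [(30, 2.0), (40, 3.0), (50, 4.0)]
grid = [20, 40, 60]
curve = partial_dependence(toy_model, dataset, feature=0, grid=grid)
print(list(zip(grid, curve)))
```

Because every row is evaluated at every grid value, this procedure can create unrealistic combinations when features are correlated, which is the weakness ALE is meant to address.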

Evaluation Metrics & Criteria For Good Explanations

To produce meaningful and trustworthy insight through explainable artificial intelligence (XAI), researchers and practitioners use a variety of evaluation criteria. These criteria measure how effective, stable, and usable an explanation is, both from a technical standpoint and from the perspective of human comprehension.

The following is a summary of metrics and criteria for evaluating the quality of explanations in explainable AI systems:

| Metrics | What for? |
|---|---|
| Performance difference (D) | Measures the change in a model’s performance after an XAI method is applied. A small change indicates that the gain in explainability does not come at the cost of the original model’s performance and reliability. |
| Rule complexity (R) | In rule-based models, this metric counts the number of rules used to generate an explanation. Fewer, clearer rules generally indicate higher interpretability. |
| Feature usage (F) | Evaluates the number of features or input variables used to construct an explanation. The goal is to strike a balance: too few features might oversimplify the reasoning, while too many can overwhelm users and reduce clarity. |
| Stability (S) | Measures whether the explanations remain consistent when similar inputs are given. |

In practice, these metrics serve different audiences: developers use them to refine explainable AI algorithms, while business users rely on them to verify trust and transparency.
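The stability (S) and feature usage (F) criteria can be turned into numbers in several ways. The sketch below shows one possible reading, not a standard definition: stability is taken as the mean cosine similarity between the explanation of an instance and the explanations of nearby inputs, and feature usage as the count of features with a non-negligible attribution. The `explain` function and `WEIGHTS` are hypothetical stand-ins for a real explainer.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def stability(explain, instance, neighbors):
    """Mean cosine similarity between an explanation and those of similar inputs."""
    reference = explain(instance)
    scores = [cosine_similarity(reference, explain(n)) for n in neighbors]
    return sum(scores) / len(scores)

def feature_usage(attribution, tolerance=1e-6):
    """Number of features whose attribution magnitude exceeds the tolerance."""
    return sum(1 for a in attribution if abs(a) > tolerance)

# Hypothetical explainer: attribution = weight * value for a linear model
WEIGHTS = [2.0, -1.0, 0.0]

def explain(x):
    return [w * v for w, v in zip(WEIGHTS, x)]

instance = [1.0, 2.0, 3.0]
neighbors = [[1.1, 2.0, 3.0], [1.0, 1.9, 3.1]]
score = stability(explain, instance, neighbors)
used = feature_usage(explain(instance))
print(round(score, 4), used)
```

A score close to 1 means that similar inputs receive similar explanations. How the neighbors are chosen and which similarity measure is used are design decisions that should be documented alongside the result.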

XAI Models & Examples: From Simple to Complex

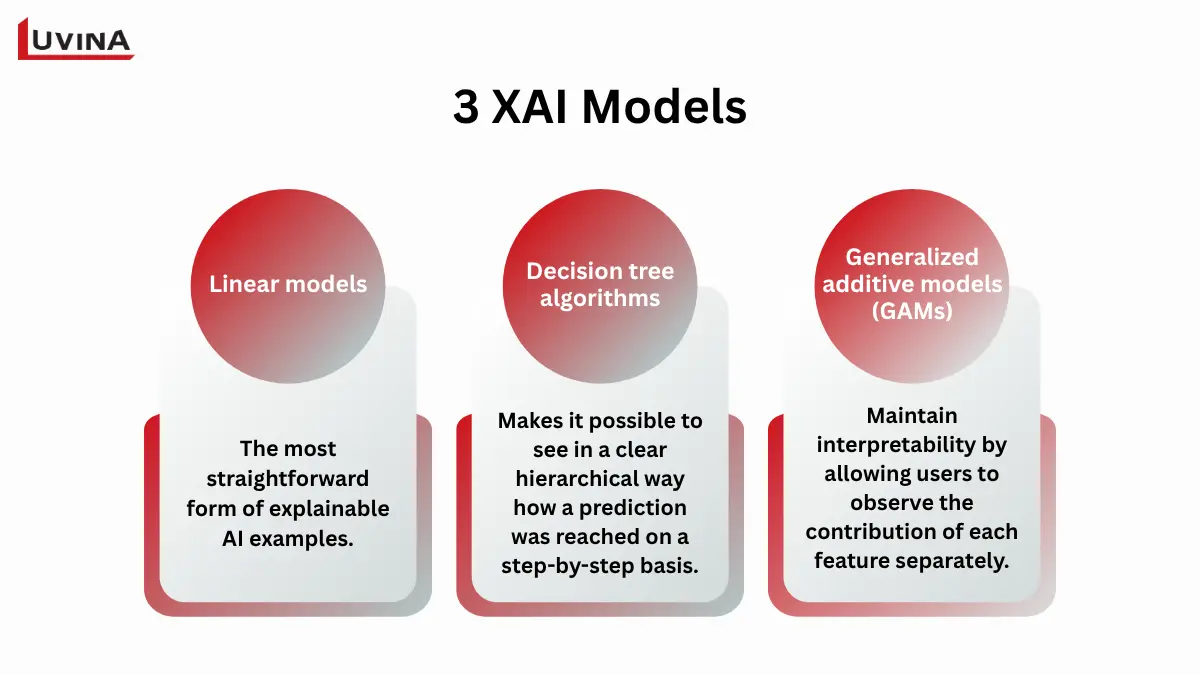

The foundation of model explainability lies in choosing models that are both accurate and interpretable. Below are several XAI models that illustrate how explainability evolves from simple, transparent structures to more advanced systems requiring additional interpretation techniques.

1. Linear models

Linear models, such as Linear Regression or Support Vector Machines (SVMs) with linear kernels, are the most straightforward examples of explainable AI. They assume a linear relationship between input features and output predictions, meaning that a change in one variable shifts the result by a fixed, predictable amount. The equation form (y = mx + c) makes these models intuitive and ideal for applications where clarity is essential.
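The y = mx + c form can be fitted and read directly, as in the minimal single-feature least-squares sketch below. The data (years of experience against salary in thousands) is invented for illustration, and `fit_line` is a hypothetical helper rather than a library function.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = m*x + c with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    m = covariance / variance
    c = mean_y - m * mean_x
    return m, c

# Made-up data: years of experience vs. salary in thousands
years = [1, 2, 3, 4, 5]
salary = [35, 40, 45, 50, 55]
m, c = fit_line(years, salary)
print(f"salary = {m:.1f} * years + {c:.1f}")
```

The slope is the explanation: in this toy data, each additional year adds the same amount to the predicted salary, and the intercept is the prediction at zero years.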

2. Decision tree algorithms

In decision trees, the decision process can be visualized as a tree in which each internal node represents a test on a feature and each leaf node represents an outcome. This structure shows, in a clear hierarchy, how a prediction was reached step by step. Due to their transparency, decision trees are often used in finance, healthcare, and compliance-based AI systems.
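The step-by-step nature of a tree's reasoning can be shown by walking a small tree and recording each test along the way. The loan-approval tree below is written by hand and entirely hypothetical, as are the feature names and thresholds; a trained tree would be traversed in the same manner.

```python
# Hypothetical loan-approval tree, written by hand for illustration
TREE = {
    "feature": "income", "threshold": 50,
    "left": {"leaf": "reject"},
    "right": {
        "feature": "debt_ratio", "threshold": 0.4,
        "left": {"leaf": "approve"},
        "right": {"leaf": "manual review"},
    },
}

def explain_path(tree, applicant):
    """Walk the tree and record every test on the way to the leaf."""
    steps = []
    node = tree
    while "leaf" not in node:
        value = applicant[node["feature"]]
        go_left = value <= node["threshold"]
        op = "<=" if go_left else ">"
        steps.append(f'{node["feature"]} = {value} {op} {node["threshold"]}')
        node = node["left"] if go_left else node["right"]
    return node["leaf"], steps

decision, steps = explain_path(TREE, {"income": 72, "debt_ratio": 0.25})
print(decision)
for step in steps:
    print(" -", step)
```

The recorded steps form the full explanation for that applicant, which is why shallow trees are easy to audit while very deep ones gradually lose that advantage.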

3. Generalized additive models (GAMs)

GAMs extend linear models by incorporating nonlinear relationships through smooth functions. They maintain interpretability by allowing users to observe the contribution of each feature separately. This balance of flexibility and transparency makes GAMs suitable for complex datasets where traditional linear models fall short.
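The additive structure of a GAM is what keeps it interpretable: the prediction is an intercept plus one term per feature, so each term can be inspected on its own. In the sketch below the shape functions are written by hand as an assumption for illustration, whereas a real GAM would learn them from data; `SHAPE_FUNCTIONS`, `INTERCEPT`, and `gam_predict` are hypothetical names.

```python
import math

# Hypothetical shape functions; a real GAM would learn these from data
SHAPE_FUNCTIONS = {
    "age": lambda v: 0.002 * (v - 40) ** 2,  # U-shaped effect around 40
    "income": lambda v: math.log1p(v),       # diminishing returns
}
INTERCEPT = 1.0

def gam_predict(row):
    """Return the prediction and each feature's separate contribution."""
    contributions = {name: f(row[name]) for name, f in SHAPE_FUNCTIONS.items()}
    return INTERCEPT + sum(contributions.values()), contributions

prediction, parts = gam_predict({"age": 50, "income": 3.0})
print(round(prediction, 3), {k: round(v, 3) for k, v in parts.items()})
```

Plotting each shape function over its feature's range gives the per-feature view that GAMs are known for.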

Toolkits, Libraries & Platforms For Explainable AI

The emergence of explainable machine learning has produced a proliferation of toolkits and frameworks that support transparency and accountability in AI systems. Well-chosen explainable AI tools can improve transparency and build trust between humans and machines, particularly for high-stakes decisions in areas such as healthcare, finance, and autonomous systems.

| Tools | Description | Key features | Primary focus |
|---|---|---|---|
| InterpretML | Offers both model-specific and model-agnostic interpretability methods for ML models. | Global and local explanations; feature importance and instance-level insights; works with multiple ML frameworks | Model-agnostic/visualization |
| SHAP (SHapley Additive exPlanations) | Assigns fair feature importance using Shapley values. | Unified feature importance metric; model-agnostic SHAP values; works with tree-based and deep models | Model-agnostic/feature attribution |
| LIME (Local Interpretable Model-Agnostic Explanations) | Explains model predictions locally with human-readable summaries. | Localized interpretations; supports text, image, and tabular data | Model-agnostic/local explanation |
| DALEX | Visualizes and validates model performance through consistent interpretation methods. | Consistent interpretability API; model performance visualization; model validation and debugging | Visualization/model validation |
| ELI5 (Explain Like I’m 5) | Explains predictions with clear textual output and feature-based importance metrics. | Feature and permutation importance; text-based explanations; compatible with scikit-learn and XGBoost | Model-agnostic/educational |
| AI Explainability 360 | Provides diverse interpretability and fairness algorithms. | Fairness and bias detection; model-agnostic tools; support for scikit-learn and TensorFlow | Fairness & bias/model-agnostic |
| Shapash | Enables easy interpretation of model predictions. | Simplified SHAP interface; feature contribution visualization; works for regression and classification | Visualization/feature attribution |
| Alibi-Explain | Provides model-agnostic explanation techniques. | Counterfactual and anchor methods; tabular and image data support; integrated with the Alibi library | Model-agnostic/counterfactual |
| FAT Forensics | Analyzes model behavior, fairness, and interpretability. | Forensic model inspection; interpretability metrics; bias and fairness evaluation | Fairness & bias/evaluation |
| ExBERT | Interprets BERT and transformer-based models through attention visualization. | Attention visualization; token-level interpretation; support for NLP transformers | NLP-specific/visualization |

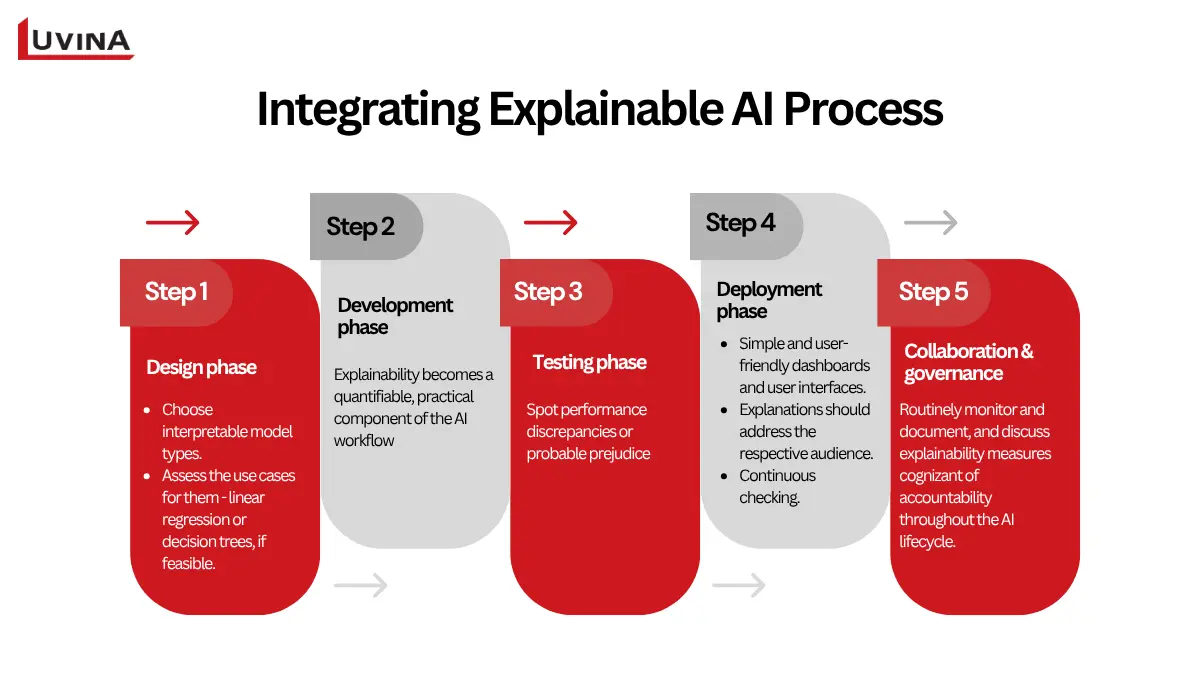

Integrating Explainable AI

Building explainability into every stage of the AI and machine learning life cycle helps keep AI systems transparent, ethical, and reliable. The following sections look at how XAI can be integrated at each stage of AI product creation.

As the industry moves toward greater automation and regulatory scrutiny, embracing XAI is no longer just a technical upgrade but a strategic necessity.

1. Design phase

Defining explainability as a fundamental design requirement at the start of product development is critical. Product teams should assess their use cases, particularly in healthcare, finance, or autonomous systems, that is, applications where model transparency is most important, and choose interpretable model types such as linear regression or decision trees, if feasible. Embedding XAI concepts early also aids conformance with ethical and legal norms.

2. Development phase

During the development phase, explainable AI becomes a measurable, practical part of the technical implementation rather than a theoretical idea or an afterthought. Combining inherently interpretable algorithms with post-hoc explanation methods like LIME or SHAP helps developers make complicated models more approachable.

Teams may use open-source frameworks like AI Explainability 360, InterpretML, or ELI5 to track the contribution of every feature to a model’s outcome.

Model documentation and traceability are also crucial: maintaining transparency depends on documenting which data elements, model parameters, and design choices affect predictions. During this phase, ongoing bias and fairness checks should also be carried out to verify that model behavior stays consistent across demographic groups.
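One simple form of the bias check described above is to compare how often the model produces a positive outcome for each demographic group. The sketch below is only an illustration under stated assumptions: the predictions and group labels are invented, `selection_rates` and `parity_gap` are hypothetical helper names, and a gap in selection rates is a signal to investigate rather than proof of unfairness.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def parity_gap(rates):
    """Largest difference in selection rate between any two groups."""
    return max(rates.values()) - min(rates.values())

# Made-up model outputs (1 = approved) and a hypothetical group label
predictions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
rates = selection_rates(predictions, groups)
gap = parity_gap(rates)
print(rates, gap)
```

Running such a check on every retrained model, and logging the result with the model documentation, keeps the fairness record traceable over time.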

3. Testing phase

During testing, explainable AI techniques are especially helpful because they clarify the reasons behind a model’s behavior. By studying feature attributions and decision paths, developers can spot performance discrepancies or potential bias. Transparent assessment at this stage helps verify that the system not only performs well but also behaves ethically and predictably.

4. Deployment phase

Once a system is launched, explainable AI is crucial for maintaining transparency in real-world use. The emphasis at this point shifts to communicating explanations clearly to different kinds of users.

- Dashboards and user interfaces should present AI decisions in a simple, visual, and user-friendly way.

- Explanations should be tailored to their audience: end users may want quick, plain-language summaries, while data scientists may require explicit statistical reasoning.

- Continuous monitoring should detect model drift or inconsistent explanations as new data enters the system.
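Monitoring for inconsistent explanations can start with something as simple as comparing feature attributions from a reference period with those from live traffic. The sketch below flags features whose average absolute attribution has shifted by more than a relative threshold. The attribution batches, the 50% threshold, and the function names are assumptions chosen for illustration, not an established monitoring standard.

```python
def mean_abs_attribution(attributions):
    """Average absolute attribution per feature over a batch of explanations."""
    n = len(attributions)
    width = len(attributions[0])
    return [sum(abs(row[i]) for row in attributions) / n for i in range(width)]

def relative_change(old, new):
    if old == 0:
        return 0.0 if new == 0 else float("inf")
    return abs(new - old) / old

def drifted_features(reference, live, threshold=0.5):
    """Indices of features whose mean |attribution| moved by more than `threshold`."""
    ref = mean_abs_attribution(reference)
    cur = mean_abs_attribution(live)
    return [i for i, (r, c) in enumerate(zip(ref, cur))
            if relative_change(r, c) > threshold]

# Made-up attribution batches: rows are explanations, columns are features
reference = [[1.0, 0.5], [1.2, 0.4]]
live = [[1.1, 1.5], [0.9, 1.3]]
flagged = drifted_features(reference, live)
print(flagged)
```

A flagged feature does not say what went wrong, only that the model now leans on that input differently than before, which is a reasonable trigger for a closer review.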

5. Collaboration & governance

Successful explainable AI integration requires collaboration among data scientists, domain experts, ethicists, and policymakers to ensure explainability balances technical, ethical, and legal aspects. It is good practice to routinely monitor, document, and discuss explainability measures, with clear accountability throughout the AI lifecycle. As global frameworks like the EU AI Act emphasize transparency and bias mitigation, explainable AI plays a key role in building ethical and compliant AI systems.

Challenges, Pitfalls & Common Misconceptions

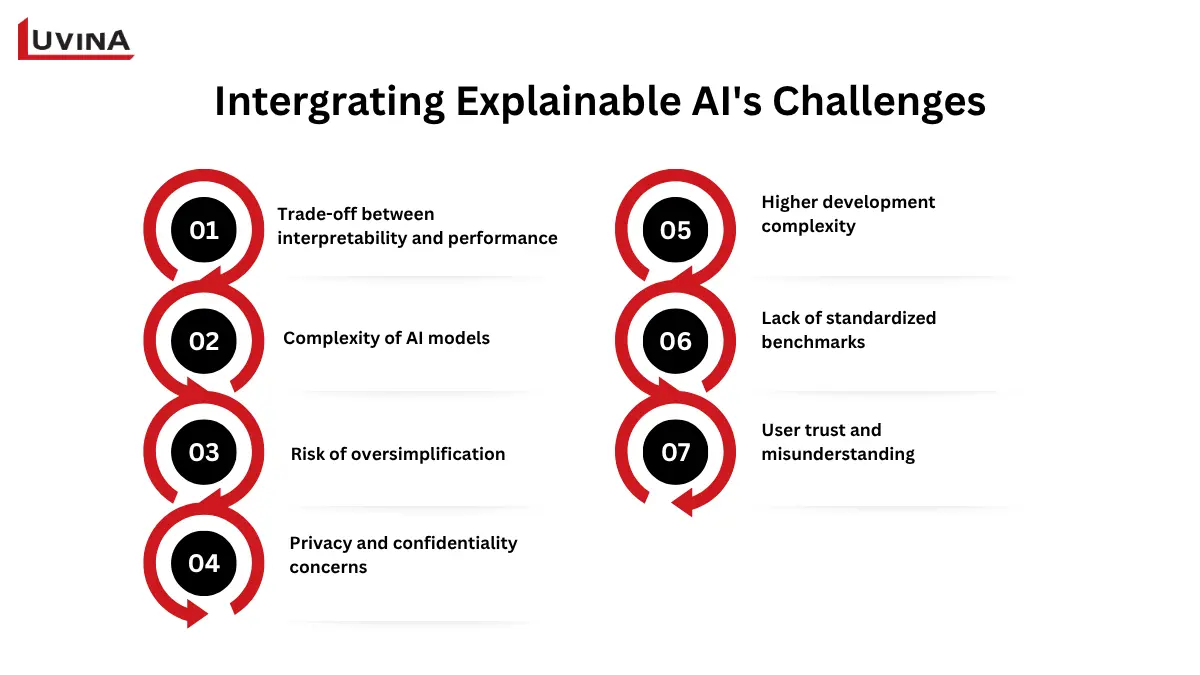

Although explainable AI increases accountability and transparency, deploying it still raises difficult issues, many of which go beyond purely technical challenges. The main obstacles companies encounter when designing or implementing XAI systems are listed below.

Trade-off between interpretability and performance

Simplifying models improves explainability but can reduce predictive power. Deep neural networks, while often more accurate, largely remain black boxes.

Complexity of AI models

Many modern algorithms involve complex mathematical relationships that are not easily translated into human-understandable explanations. Forcing them into a transparent form can lead to oversimplification, which distorts the picture of how decisions are actually made.

Risk of oversimplification

Some explanation methods oversimplify model reasoning and so lead to misreadings. Although simplification helps non-experts grasp results, it can also hide the underlying complexity and lead to mistaken conclusions about how decisions are made.

Privacy and confidentiality concerns

Greater transparency might reveal sensitive information or proprietary algorithms. Over-disclosure of how a system works may expose trade secrets or user data, raising compliance and security concerns.

Higher development complexity

Designing explainable AI models that offer unambiguous, understandable insights increases technical complexity and resource requirements. Incorporating interpretability tooling and validation procedures is important for developers, although it can delay implementation and raise costs.

Lack of standardized benchmarks

The absence of widely recognized measures or definitions of explainability makes it hard to assess or compare models. What a team considers “interpretable” can depend on its sector and even its country, which leads to different implementation guidelines.

User trust and misunderstanding

Even when users are given explanations, they may distrust or misunderstand them. Users may assume that because a model’s outcome can be explained, the model is unbiased or, more importantly, accurate; that is not necessarily the case. Explanations should not be trusted without scrutiny, and users need education and clarity about what the reported correlations do and do not mean.

Emerging Trends & the Future of Explainable AI

Rising expectations for transparency, ethics, and trust, combined with rapid developments in artificial intelligence, will shape the evolution of explainable AI. As AI continues to be integrated into daily life and business, explainability is changing to meet both technical and legal expectations.

One major trend is the movement toward context-specific interpretability, where explanations are tailored to each sector, such as automation, healthcare, or finance, so that users receive information that is both meaningful and accurate. Another is the development of hybrid models that combine performance and transparency, using methods such as surrogate modeling and attention visualization to make deep learning more understandable.

Regulatory frameworks are adding to the momentum by pushing companies to build explainability into their compliance processes, systems, and design. At the same time, applications of explainable AI are advancing into areas such as multimodal and generative AI, where new methods will be required for analyzing unstructured data such as speech, text, and images.

Looking forward, the future of explainable AI depends on continued research and on collaboration between developers and policymakers. Transparency and accountability will remain a key focus for the next generation of intelligent systems as AI becomes more powerful.

FAQ

What is the difference between Explainable AI and Generative AI?

Explainable AI focuses on making AI decisions transparent and understandable, showing how and why a model produces a specific result. In contrast, Generative AI (like ChatGPT or DALL·E) creates new content such as text, images, or audio.

While explainability can be applied to generative systems, most generative AI models remain “black boxes,” offering limited insight into their internal reasoning.

How is interpretability different from explainability?

Interpretability means understanding the cause behind an AI model’s output – how input changes affect results. Explainability goes deeper by describing the reasoning process behind those decisions, helping users grasp why a particular outcome occurred.

How does Explainable AI relate to Responsible AI?

Explainable AI ensures AI decisions are understandable after results are generated, while Responsible AI focuses on designing ethical, transparent, and fair algorithms before deployment.

Conclusion

In summary, this article covered the foundations, techniques, and practical applications of explainable AI, from fundamental principles and model types to evaluation metrics, toolkits, and deployment practices. XAI not only improves model transparency but also helps build user trust and supports fairness across sectors. With appropriate methods and tools, organizations can turn opaque “black box” systems into understandable and accountable solutions.

Looking forward, explainable AI will keep evolving alongside developments in automation and deep learning. As AI systems increasingly influence major decisions in healthcare, banking, and governance, explainability will become a benchmark for developing trustworthy, ethical, and human-centered AI.

Want to make your AI systems transparent and compliant?

Request a free XAI readiness checklist or consult with our AI ethics team.

