Artificial intelligence is transforming businesses, but can we trust what we don’t understand? That question sits at the heart of the debate about explainable vs interpretable AI. As models such as deep neural networks grow more complex, they increasingly behave like “black boxes”, leaving even experts unsure how best to interpret them.

Distinguishing interpretability from explainability is not just an academic exercise; it is essential for building reliable, trustworthy, and ultimately deployable AI systems. This article explains the key differences between the two and when and why each one matters.

What is Interpretable AI?

Interpretable artificial intelligence is AI that enables people to understand how a model generates its predictions. Unlike black-box models, where the path to a decision is hidden from view, interpretable AI lets users see the associations between inputs and outputs, which makes it easier to validate the model, debug it, and trust its results.

Some models, such as linear regression, decision trees, and rule-based systems, are transparent by design. Others rely on post-hoc interpretation: techniques that clarify the behavior of more complex models after they have been trained.

Interpretable AI is essential in regulated sectors such as banking, healthcare, and legal services. Within the broader debate of explainable vs interpretable AI, interpretability is the foundation: it lets practitioners understand how a model works before they turn to additional explainability methods.

What is Explainable AI (XAI)?

Explainable artificial intelligence (XAI) is a set of techniques that help people understand complex AI models by explaining why a model made a particular prediction. XAI also highlights which features or data points most influenced the model’s output.

In practice, XAI can have a significant impact. In medical diagnosis, for instance, it can show physicians which patterns in an X-ray led to a predicted diagnosis, building trust and helping clinicians make more accurate decisions. In self-driving vehicles, XAI can explain why a vehicle braked or swerved to avoid a pedestrian, so that passengers or authorized reviewers can understand the rationale behind the AI’s decision.

More importantly, within the explainable vs interpretable AI debate, XAI goes beyond interpretability: it aims to show not just how a model works in general, but the reasoning behind each individual decision the algorithm makes.

To learn more about explainable AI, see our in-depth article on its core concepts: Explainable AI Guide: From Basics to Future Trends.

Key differences: Interpretable vs Explainable AI

Interpretability and explainability share the goal of making AI more transparent, but they pursue it in different ways, differing in approach, methodology, scope, and the types of models they suit best. The table below summarizes the major distinctions between explainable vs interpretable AI:

| Aspect | Interpretability | Explainability |
| --- | --- | --- |
| Model transparency | Provides insight into the model’s internal logic and structure | Focuses on explaining why a specific decision was made |
| Level of detail | Offers a detailed, granular understanding of each component | Summarizes complex processes into simpler, high-level explanations |
| Development approach | Uses inherently understandable models like decision trees or linear regression | Applies post-hoc techniques such as SHAP or LIME to explain decisions |
| Suitability for complex models | Less suitable for highly complex models due to structural transparency limits | Well-suited for complex models, since it explains decisions without exposing all internal mechanics |
| Challenges / limitations | May reduce model performance or flexibility to maintain transparency | Can oversimplify results or miss intricate model interactions |
| Use cases / applications | Ideal for applications requiring full transparency, e.g., credit scoring, healthcare diagnostics | Useful in complex AI systems like deep learning models, self-driving cars, or large-scale recommendation engines |

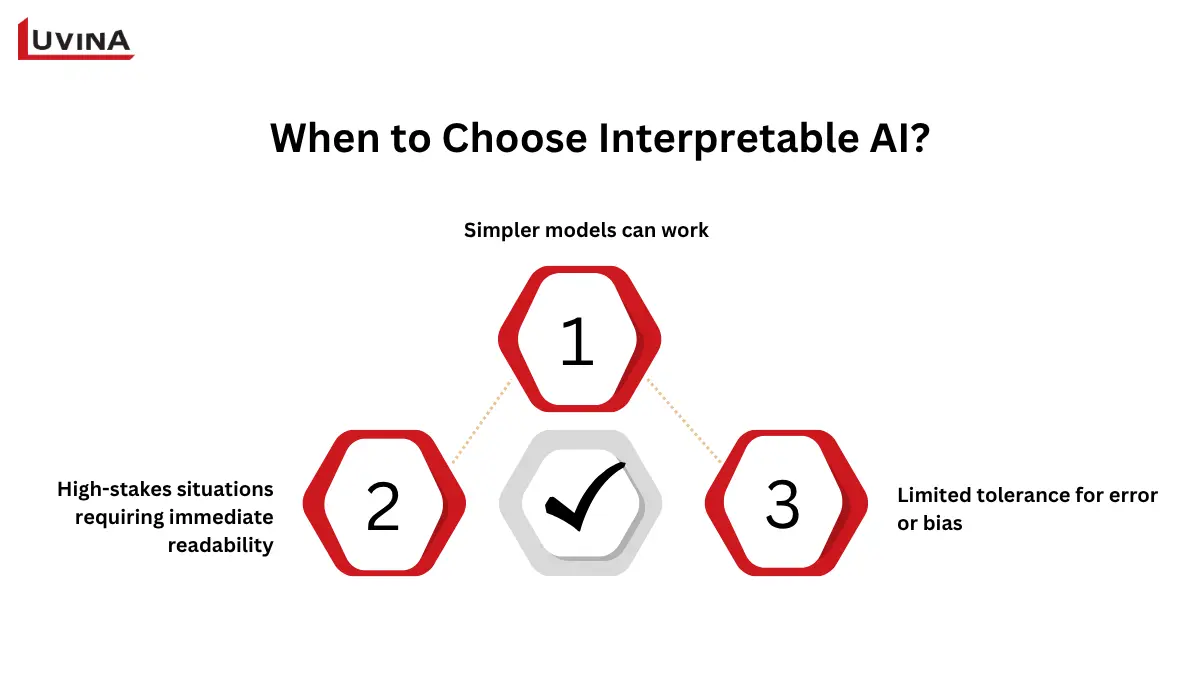

When to choose Interpretable AI

Choosing the right strategy requires knowing where each approach offers the most benefit for reliability, clarity, and legal compliance. Interpretable AI is the better choice when simplicity and transparency matter more than the ability to handle extremely complex data.

Simpler models are sufficient: When a task does not involve massive datasets or deeply nested patterns, interpretable models such as linear regression or decision trees can deliver adequate results without the opacity of black-box systems.

High-stakes situations requiring immediate readability: In domains such as air traffic control or medical diagnostics, a human operator must be able to read and verify each decision immediately.

Limited tolerance for error or bias: When errors must be minimized and bias detected early, interpretable AI makes it possible to identify problems quickly and correct them, which builds trust and accountability in the system.
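To make the readability point concrete, here is a minimal Python sketch of a hand-written decision tree that returns both its decision and the exact sequence of tests that produced it. The loan pre-screen scenario, the feature names (`debt_to_income`, `missed_payments`), and the thresholds are hypothetical, chosen only for illustration; a real tree would be learned from data.

```python
def predict_with_path(applicant):
    """Hypothetical loan pre-screen tree: return (decision, list of tests applied)."""
    path = []
    # First split: debt-to-income ratio (illustrative threshold).
    if applicant["debt_to_income"] > 0.45:
        path.append("debt_to_income > 0.45")
        return "decline", path
    path.append("debt_to_income <= 0.45")
    # Second split: payment history (illustrative threshold).
    if applicant["missed_payments"] >= 2:
        path.append("missed_payments >= 2")
        return "manual review", path
    path.append("missed_payments < 2")
    return "approve", path


decision, path = predict_with_path({"debt_to_income": 0.30, "missed_payments": 3})
print(decision)           # manual review
print(" -> ".join(path))  # debt_to_income <= 0.45 -> missed_payments >= 2
```

Because every decision comes with the list of tests that produced it, an operator can check the reasoning at a glance, which is the property that matters in the high-stakes settings described above.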

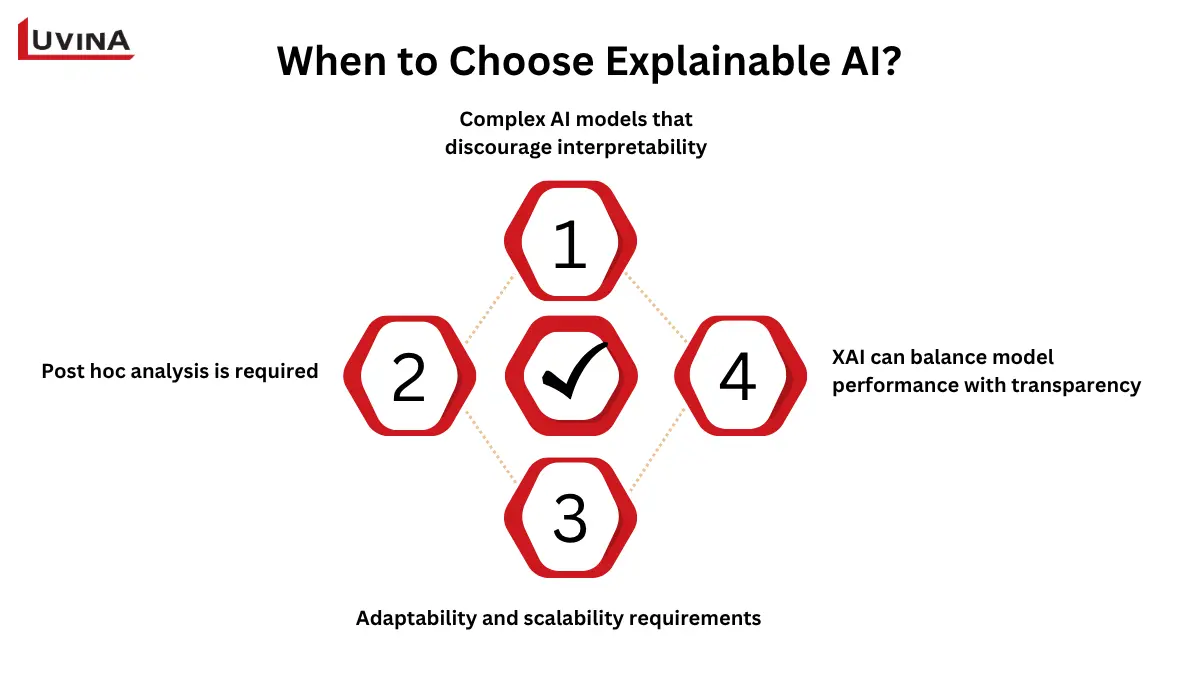

When to choose Explainable AI

In the discussion of explainable vs interpretable AI, explainable AI (XAI) is the preferred choice when dealing with complex models that cannot be easily interpreted.

Complex models that resist interpretation: Some AI systems must capture highly complex patterns in data that a simple model cannot represent, and simplifying the model would sacrifice too much. XAI offers a pragmatic way to explain such a model’s behavior without simplifying the model itself.

Post-hoc analysis is required: When decisions must be examined or audited after the fact, XAI clarifies the results and supports recommendations based on the specific outcomes obtained.

Adaptability and scalability requirements: When AI systems must evolve rapidly as new data arrives or the environment changes, XAI preserves transparency without constraining scalable, flexible infrastructure.

XAI can balance model performance with transparency: XAI lets organizations keep high-performing, adaptive machine learning models (for example, in adaptive cybersecurity or in algorithms that adjust to changing markets in real time) while still providing the explanations needed for transparency and accountability. The same applies to high-performing recommendation systems in healthcare settings or home diagnostics.

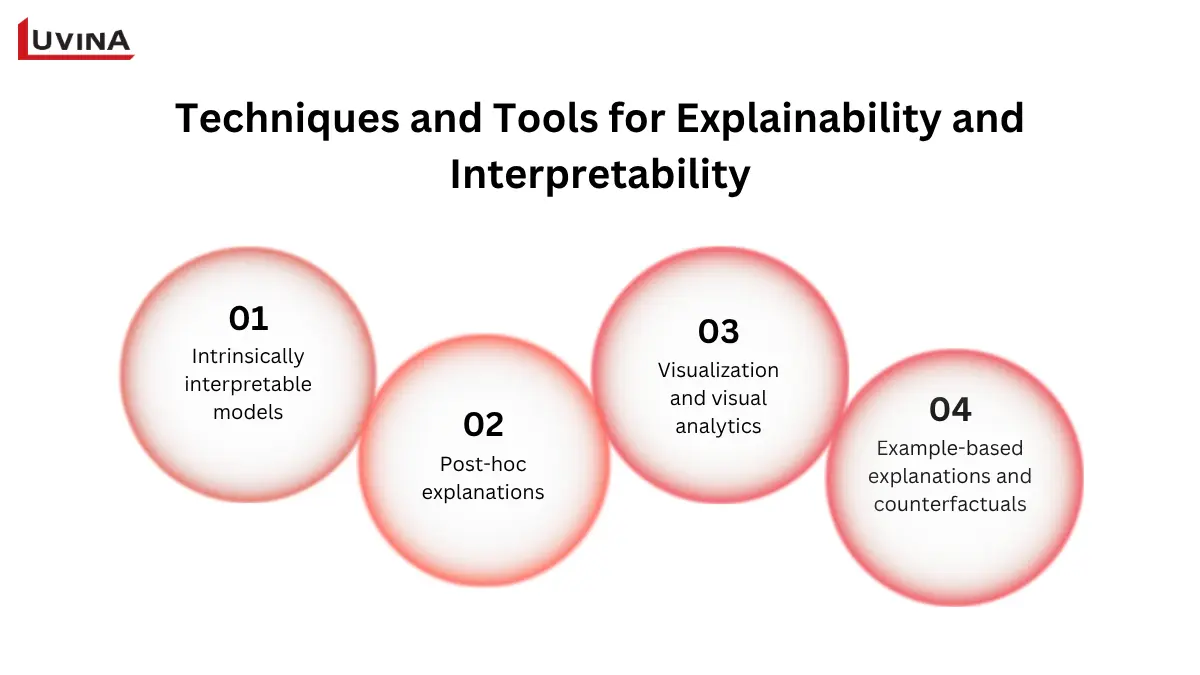

Techniques and tools for Explainability and Interpretability

The distinction between explainable and interpretable AI helps determine which transparency methods fit best, depending on model complexity and stakeholder needs.

As the industry moves toward greater automation and regulatory scrutiny, embracing XAI is no longer just a technical upgrade but a strategic necessity. Want to build transparent fintech solutions with Explainable AI? Partner with Luvina to design compliant, auditable, and trustworthy AI systems.

Intrinsically interpretable models

These models are understandable by design. Linear models assign a weight to each feature, showing directly how each input affects the prediction, while decision trees lay out their choices in a clear hierarchical order. Although highly transparent, they can underperform more sophisticated architectures on complex tasks.
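For a linear model, each feature’s contribution to a prediction is simply its weight times its value, so the explanation can be read straight off the model. The Python sketch below assumes a hypothetical credit-score model; the feature names, weights, and intercept are invented for illustration.

```python
# Hypothetical linear credit-score model; weights and intercept are illustrative only.
WEIGHTS = {"income_k": 2.0, "debt_to_income": -100.0, "years_employed": 5.0}
INTERCEPT = 600.0


def score(applicant):
    """Prediction = intercept + sum of weight * feature value."""
    return INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())


def contributions(applicant):
    """Each feature's additive share of the prediction (weight * value)."""
    return {f: w * applicant[f] for f, w in WEIGHTS.items()}


applicant = {"income_k": 40, "debt_to_income": 0.5, "years_employed": 4}
print(f"score = {score(applicant):.1f}")  # score = 650.0
# List features from most to least influential for this applicant.
ranked = sorted(contributions(applicant).items(), key=lambda kv: abs(kv[1]), reverse=True)
for feature, value in ranked:
    print(f"{feature:>15}: {value:+.1f}")
```

The contributions plus the intercept add up exactly to the score, which is what makes this kind of model intrinsically interpretable: nothing has to be approximated after training.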

Post-hoc explanations

Once a model has been trained, several approaches can help reveal what it has learned. For example, SHAP (SHapley Additive exPlanations) calculates the contribution of each feature to a given outcome, while LIME (Local Interpretable Model-agnostic Explanations) fits a simple surrogate model around an individual prediction to explain the model’s local behavior.
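SHAP builds on Shapley values from cooperative game theory. For a model with only a few features, they can be computed exactly by averaging each feature’s marginal contribution over every coalition of the other features. The Python sketch below is a brute-force illustration, not the `shap` library’s API; it assumes a hypothetical toy model and treats features outside a coalition as taking a fixed baseline value, which is one of several conventions in use.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition are set to their baseline value, one common
    convention for defining a model's output on a partial set of features.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(z):
    # Hypothetical model: an additive term plus an interaction between z[1] and z[2].
    return 2 * z[0] + z[1] * z[2]


x, baseline = [1, 2, 3], [0, 0, 0]
phi = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phi])  # [2.0, 3.0, 3.0]
```

The three values sum to `toy_model(x) - toy_model(baseline)`, the additivity property SHAP relies on, and the interaction term is split evenly between the second and third features. Because enumeration grows exponentially with the number of features, the `shap` library relies on model-specific algorithms and sampling approximations in practice.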

Visualization and visual analytics

Stakeholders can examine the relationships between inputs and outputs using tools such as feature importance charts, partial dependence plots, and individual conditional expectation (ICE) charts. These visualizations help reveal patterns, biases, or unexpected behavior.
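The values behind partial dependence and ICE charts are straightforward to compute: an ICE curve sweeps one feature across a grid for a single row while holding that row’s other features fixed, and the partial dependence curve is the average of those ICE curves. The Python sketch below uses a hypothetical two-feature model with an interaction, so that the individual curves disagree; plotting is left out.

```python
def ice_curves(model, rows, feature, grid):
    """One curve per row: predictions as `feature` sweeps over `grid`."""
    curves = []
    for row in rows:
        curve = []
        for value in grid:
            modified = list(row)
            modified[feature] = value  # vary one feature, keep the rest fixed
            curve.append(model(modified))
        curves.append(curve)
    return curves


def partial_dependence(model, rows, feature, grid):
    """Average of the ICE curves at each grid point."""
    curves = ice_curves(model, rows, feature, grid)
    return [sum(curve[k] for curve in curves) / len(curves) for k in range(len(grid))]


def toy_model(z):
    # Hypothetical model: the effect of z[0] depends on the sign and size of z[1].
    return z[0] * z[1] + z[1]


rows = [[5, 1], [5, -1], [5, 3]]
grid = [0, 1, 2]
print(ice_curves(toy_model, rows, 0, grid))          # [[1, 2, 3], [-1, -2, -3], [3, 6, 9]]
print(partial_dependence(toy_model, rows, 0, grid))  # [1.0, 2.0, 3.0]
```

Here the averaged curve rises steadily, yet one ICE curve falls: a case of heterogeneous behavior that a partial dependence plot alone would hide.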

Example-based explanations and counterfactuals

Example-based explanations use actual instances from the dataset to illustrate model behavior, while counterfactuals construct “what-if” scenarios that show how an input would need to change to alter the model’s decision. Both strategies make complex models easier to understand.
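A counterfactual answers the question “what is the smallest change to this input that would change the decision?” The Python sketch below finds one by brute force: it tries single-feature changes, one direction per feature, and keeps the cheapest change that turns a rejection into an approval. The scoring model, approval threshold, and per-unit cost of each change are all hypothetical; practical counterfactual methods typically use optimization with plausibility and actionability constraints.

```python
# Hypothetical linear scoring model with an approval threshold.
WEIGHTS = {"income_k": 2, "dti_pct": -1, "years_employed": 5}
INTERCEPT, THRESHOLD = 600, 650

# Hypothetical actionable changes: (direction the applicant can move the feature, cost per unit).
ACTIONS = {"income_k": (+1, 1.0), "dti_pct": (-1, 0.25), "years_employed": (+1, 5.0)}


def approved(applicant):
    score = INTERCEPT + sum(w * applicant[f] for f, w in WEIGHTS.items())
    return score >= THRESHOLD


def counterfactual(applicant, max_steps=100):
    """Cheapest single-feature change that flips the decision, as (feature, new_value, cost)."""
    best = None
    for feature, (direction, unit_cost) in ACTIONS.items():
        for steps in range(1, max_steps + 1):
            candidate = dict(applicant)
            candidate[feature] = applicant[feature] + direction * steps
            if approved(candidate):
                cost = steps * unit_cost
                if best is None or cost < best[2]:
                    best = (feature, candidate[feature], cost)
                break  # larger moves along this feature would only cost more
    return best  # None if no single change within max_steps flips the decision


applicant = {"income_k": 40, "dti_pct": 70, "years_employed": 2}
print(approved(applicant))        # False (score 620)
print(counterfactual(applicant))  # ('dti_pct', 40, 7.5)
```

Read as an explanation, the result says that this applicant would have been approved if their debt-to-income ratio had been 40% instead of 70%.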

Business impact: Trust, compliance & performance

Trust, fairness, and accountability are all prerequisites for using artificial intelligence in business. Transparency, whether achieved through interpretable or explainable AI, helps ensure that customers understand how decisions are made, which increases their trust in AI systems.

Regulatory compliance is another major advantage. Regulations such as the EU’s GDPR require businesses to provide meaningful information about the logic behind automated decisions. Adopting explainable and interpretable AI helps companies meet these requirements while demonstrating responsibility and accountability.

Transparency also supports model debugging and performance improvement. Seeing how a model makes decisions helps data scientists identify errors, biases, and inefficiencies, enabling iterative improvements in accuracy and efficiency. In sensitive applications such as hiring, lending, or policing, fairness and bias mitigation are paramount; transparent AI promotes more ethical and equitable outcomes by making bias easier to detect and reduce.

Finally, transparent AI increases customer satisfaction and engagement. When customers can understand AI-driven decisions, their interactions become more meaningful, which improves their experience and their confidence in the system. Prioritizing explainability and interpretability lets companies realize these benefits while upholding both ethical standards and performance.

Industry applications & use cases

Interpretable and explainable methods bring transparency and trust to AI models, particularly when those models inform high-stakes decisions. Important real-world examples include:

Healthcare

Explainable AI supports clinical decision-making by highlighting the reasons behind a diagnosis or treatment recommendation.

Example: A model predicting a patient’s readmission risk can report the most important contributing variables, such as medical history, demographics, and social determinants of health.

Finance

In payment fraud detection, explainable and interpretable AI can identify which transaction-profile variables caused a payment to be flagged as suspicious, telling investigators which factors drove the decision at the time it was made.

Example: A credit-scoring model can make its decisions transparent by stating explicitly which factors influenced an applicant’s credit score.

Customer service & recommendations

Recommendation tools that explain their suggestions help customers understand why they are seeing them, which increases confidence and satisfaction.

Example: A recommender can show why particular items or services are suggested, based on the user’s actions and preferences.

Regulated industries

High-stakes fields such as healthcare, insurance, and criminal justice benefit from explainable AI because it helps meet compliance standards and provides clarity for audits.

Future directions

Emerging directions include interactive interfaces that let users probe model behavior using principles borrowed from the potential-outcomes framework, improved natural language explanations, and more standardized measures for evaluating the quality of AI explanations. These developments build on existing uses and should further improve the accuracy, reliability, usability, and understandability of AI systems.

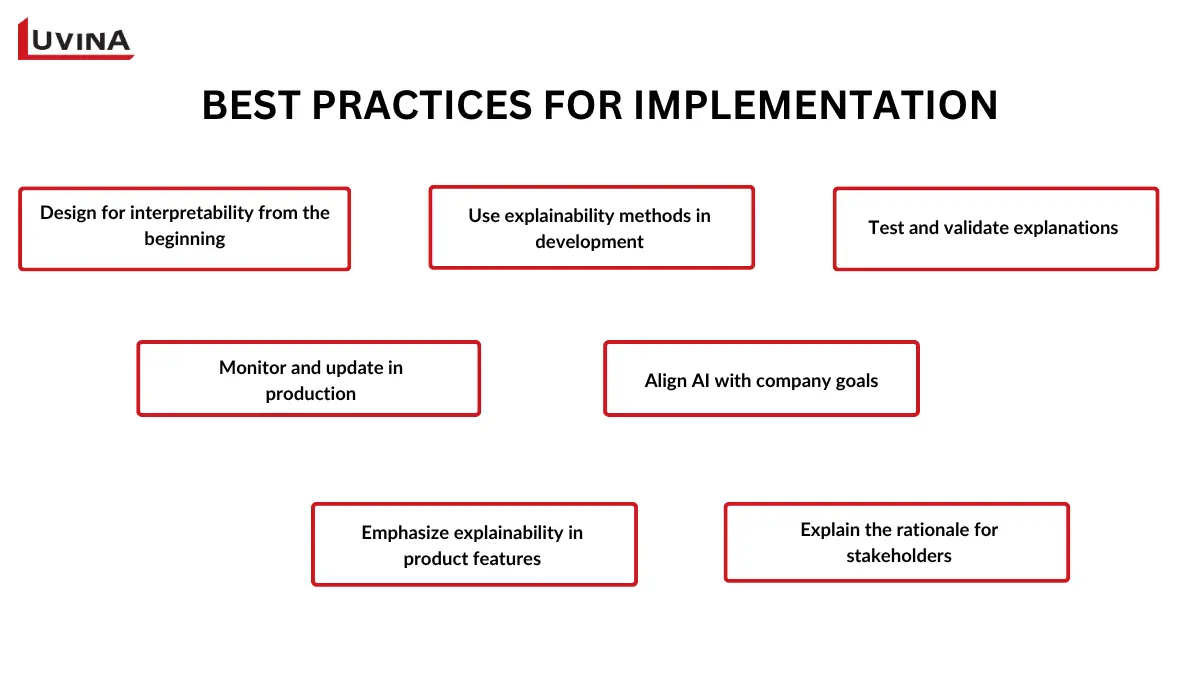

Best practices for implementation

Implementing transparent and reliable AI systems requires a deliberate approach. The following practices help ensure that explainable and interpretable AI are implemented effectively:

Design for interpretability from the beginning: Define clear transparency objectives up front that reflect stakeholder expectations and use cases.

Build explainability into development: Use inherently interpretable models where possible, post-hoc methods such as SHAP or LIME for complex models, and visualization tools to communicate the results.

Test and validate explanations: Establish rigorous checks that explanations faithfully reflect how the model actually makes decisions.

Monitor and update in production: Continuously track model performance, shifts in the input data, and the accuracy of explanations once the model is live.

Align AI with company goals: Prioritize transparency features according to their relevance to business goals such as customer trust, regulatory compliance, or decision quality.

Emphasize explainability in product features: Identify where explainability and transparency deserve particular attention in product design and development, for example in decision support systems, recommendation engines, or predictive analytics tools. Make AI outcomes understandable through interactive visuals, natural language explanations, or example-based reasoning.

Explain the rationale for stakeholders: Show clients, policymakers, and in-house teams the value of transparent, fair, and accountable AI. Providing tools and training also helps build a culture of responsible AI development and increases confidence in the organization’s AI systems.
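One way to test whether a post-hoc explanation is faithful is to measure how well it reproduces the model it explains. The Python sketch below builds a simplified, LIME-style local surrogate (it is not the `lime` package’s API): it perturbs one input, weights the perturbed samples by proximity, fits a weighted linear model, and reports the surrogate’s weighted R² against the black box as a fidelity score. The black-box function, sample size, noise scale, and kernel width are assumptions chosen for illustration.

```python
import math
import random


def black_box(z):
    # Hypothetical opaque model: nonlinear in z[0], linear in z[1].
    return z[0] ** 2 + 3 * z[1]


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def local_surrogate(model, x, n_samples=500, scale=0.1, kernel_width=0.2, seed=0):
    """Fit a proximity-weighted linear surrogate around x; return (coefficients, fidelity)."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]  # perturb every feature
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        rows.append([1.0] + z)  # leading 1.0 is the intercept column
        targets.append(model(z))
        weights.append(math.exp(-dist2 / kernel_width ** 2))  # closer samples count more
    k = len(rows[0])
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights)) for i in range(k)]
    beta = solve(xtwx, xtwy)
    # Fidelity: weighted R^2 of the surrogate's predictions against the black box.
    preds = [sum(b * ri for b, ri in zip(beta, r)) for r in rows]
    mean_y = sum(w * y for w, y in zip(weights, targets)) / sum(weights)
    ss_res = sum(w * (y - p) ** 2 for w, y, p in zip(weights, targets, preds))
    ss_tot = sum(w * (y - mean_y) ** 2 for w, y in zip(weights, targets))
    return beta, 1.0 - ss_res / ss_tot


beta, fidelity = local_surrogate(black_box, [1.0, 2.0])
print("local slopes:", [round(b, 3) for b in beta[1:]])
print("fidelity (weighted R^2):", round(fidelity, 4))
```

With these settings the local slopes should come out close to 2 (the derivative of `z[0] ** 2` at 1.0) and 3, with fidelity near 1. A low fidelity score would be a signal that the explanation should not be trusted as-is for that input.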

Conclusion

Throughout this article, we examined the fundamental distinctions between interpretable and explainable AI, discussed when to use each approach, reviewed key techniques and tools, and highlighted practical applications across several sectors. We also outlined best practices for deploying transparent, reliable AI models aligned with business goals.

If you want to maximize the advantages of reliable AI systems and put explainable and interpretable AI to good use in your company, contact Luvina today for specialized solutions and professional support.
